Comprehensive Guide to PySpark 3.5.3: Unlocking Powerful Data Processing in Python

Explore the PySpark 3.5.3 documentation for powerful data processing in Python. Learn about Spark SQL, DataFrames, and best practices to optimize your workflows.

About PySpark

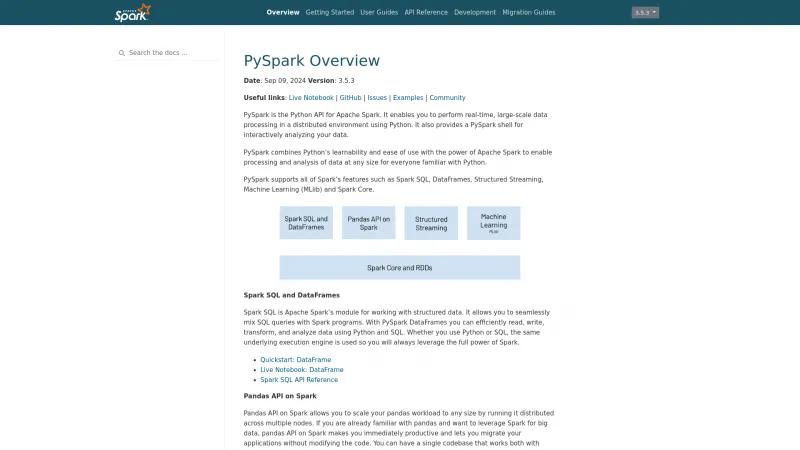

The PySpark 3.5.3 documentation gives a thorough overview of PySpark, the Python API for Apache Spark and one of the most widely used tools for large-scale data processing in Python. PySpark combines the simplicity of Python with the distributed processing capabilities of Apache Spark, which makes the documentation a valuable resource for data professionals.

The documentation is well structured, guiding users through essential components such as Spark SQL, DataFrames, and Structured Streaming. Each section is clearly written, so both beginners and experienced users can find their way around. Live notebooks and quickstart guides let users try out concepts interactively as they read.

One of the standout features is the pandas API on Spark, which eases the transition for those already familiar with pandas. It lets users scale existing pandas workloads without extensive code changes, making it a practical choice for both development and production environments.
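As a rough illustration of what "without extensive code changes" can look like, the sketch below runs one aggregation function against both a pandas DataFrame and a pandas-on-Spark DataFrame. The sample data and the names `SALES`, `monthly_totals`, `run_with_pandas`, and `run_with_spark` are made up for this example. It assumes pandas is installed, and the Spark variant additionally assumes PySpark, PyArrow, and a Java runtime are available.

```python
# Hypothetical sample data, invented for this sketch.
SALES = {"month": ["jan", "jan", "feb"], "amount": [10, 20, 5]}


def monthly_totals(df):
    """Sum `amount` per `month`.

    Uses only calls that exist on both pandas and pandas-on-Spark
    DataFrames, so the same function serves both.
    """
    return df.groupby("month")["amount"].sum().sort_index()


def run_with_pandas(data):
    # Imported lazily so this file loads even where pandas is missing.
    import pandas as pd

    return monthly_totals(pd.DataFrame(data))


def run_with_spark(data):
    # pandas API on Spark: same DataFrame-style calls, executed by Spark.
    import pyspark.pandas as ps

    # to_pandas() collects the (small) result back to the driver.
    return monthly_totals(ps.DataFrame(data)).to_pandas()
```

Only the constructor changes between the two runners (`pd.DataFrame` versus `ps.DataFrame`). Real workloads may need more adjustment than this, since not every pandas feature behaves identically on Spark.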

Moreover, the documentation emphasizes best practices, recommending the use of DataFrames over RDDs for better performance and ease of use. This guidance is crucial for users aiming to optimize their data processing tasks.
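To make the DataFrame-versus-RDD recommendation concrete, here is a minimal sketch that computes the same per-key average both ways. The data and every function name in it (`SCORES`, `average_reference`, `average_with_rdd`, `average_with_dataframe`, `main`) are hypothetical and exist only for this example. The Spark functions assume a local PySpark installation with a Java runtime.

```python
# Hypothetical sample data, invented for this sketch.
SCORES = [("alice", 80), ("bob", 90), ("alice", 100)]


def average_reference(rows):
    """Plain-Python reference: mean score per name, no Spark needed."""
    totals = {}
    for name, score in rows:
        total, count = totals.get(name, (0, 0))
        totals[name] = (total + score, count + 1)
    return {name: total / count for name, (total, count) in totals.items()}


def average_with_rdd(spark, rows):
    """RDD version: the (sum, count) bookkeeping is written by hand."""
    rdd = spark.sparkContext.parallelize(rows)
    sums = rdd.mapValues(lambda s: (s, 1)).reduceByKey(
        lambda a, b: (a[0] + b[0], a[1] + b[1])
    )
    return dict(sums.mapValues(lambda t: t[0] / t[1]).collect())


def average_with_dataframe(spark, rows):
    """DataFrame version: declarative, so Spark can plan the aggregation."""
    from pyspark.sql import functions as F

    df = spark.createDataFrame(rows, ["name", "score"])
    result = df.groupBy("name").agg(F.avg("score").alias("avg_score"))
    return {row["name"]: row["avg_score"] for row in result.collect()}


def main():
    # Imported here so the reference function works without PySpark.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.master("local[1]").appName("df-vs-rdd").getOrCreate()
    try:
        expected = average_reference(SCORES)
        assert average_with_rdd(spark, SCORES) == expected
        assert average_with_dataframe(spark, SCORES) == expected
    finally:
        spark.stop()
```

Calling `main()` on a machine with PySpark set up runs both versions against the plain-Python reference. The DataFrame version states what to compute rather than how, which is the ease-of-use point the documentation makes. The sketch does not measure the performance difference.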

Overall, the PySpark 3.5.3 documentation is a comprehensive and user-friendly resource that empowers users to harness the full potential of Apache Spark. It is a must-read for anyone looking to enhance their data processing capabilities in Python.
